Terraform State, and Terraform Remote State in particular, is an essential but equally hated aspect of Terraform. In this post, I share my design for a Terraform AzureRM Backend along with a set of recommended practices to secure the Remote State destination.

The Terraform AzureRM Backend stores the state as a Blob with the given Key within the Container within the Azure Blob Storage Account. This type of backend supports state locking and consistency checking with Azure Blob Storage native capabilities.

For a general view on state in Infrastructure as Code tools, I recommend the great blog post by Brian Grant.

Design

There are several aspects to consider when designing a resilient and secure remote backend, regardless of the exact backend type. In this post, I cover both the recommended practices to secure the Remote State destination and my AzureRM implementation.

Tip

I put these recommended practices into code (Terraform HCL) in one of my more recent articles: Terraform AzureRM Backend Automation

Access control

Access control spans two domains: network and identity. Only together do they provide effective access control.

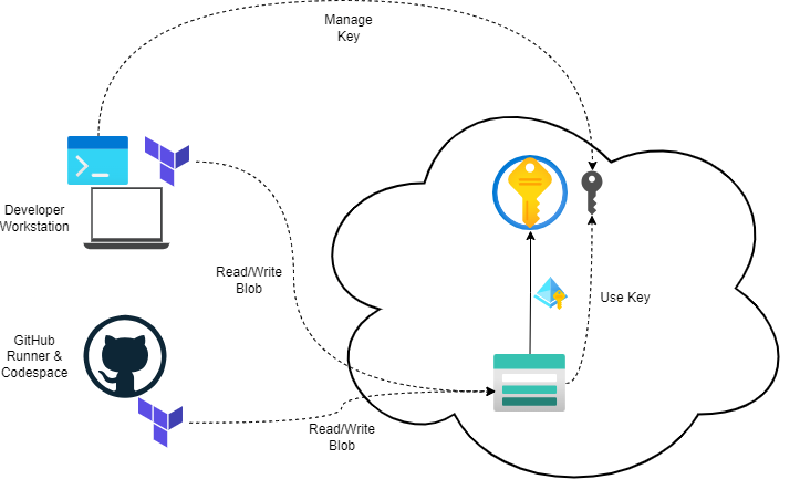

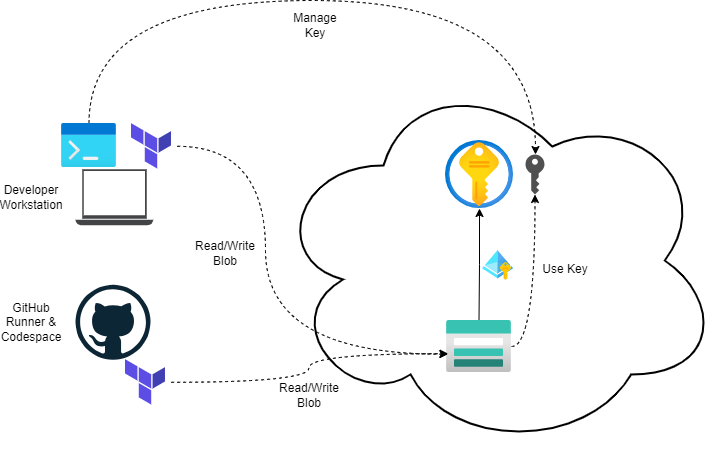

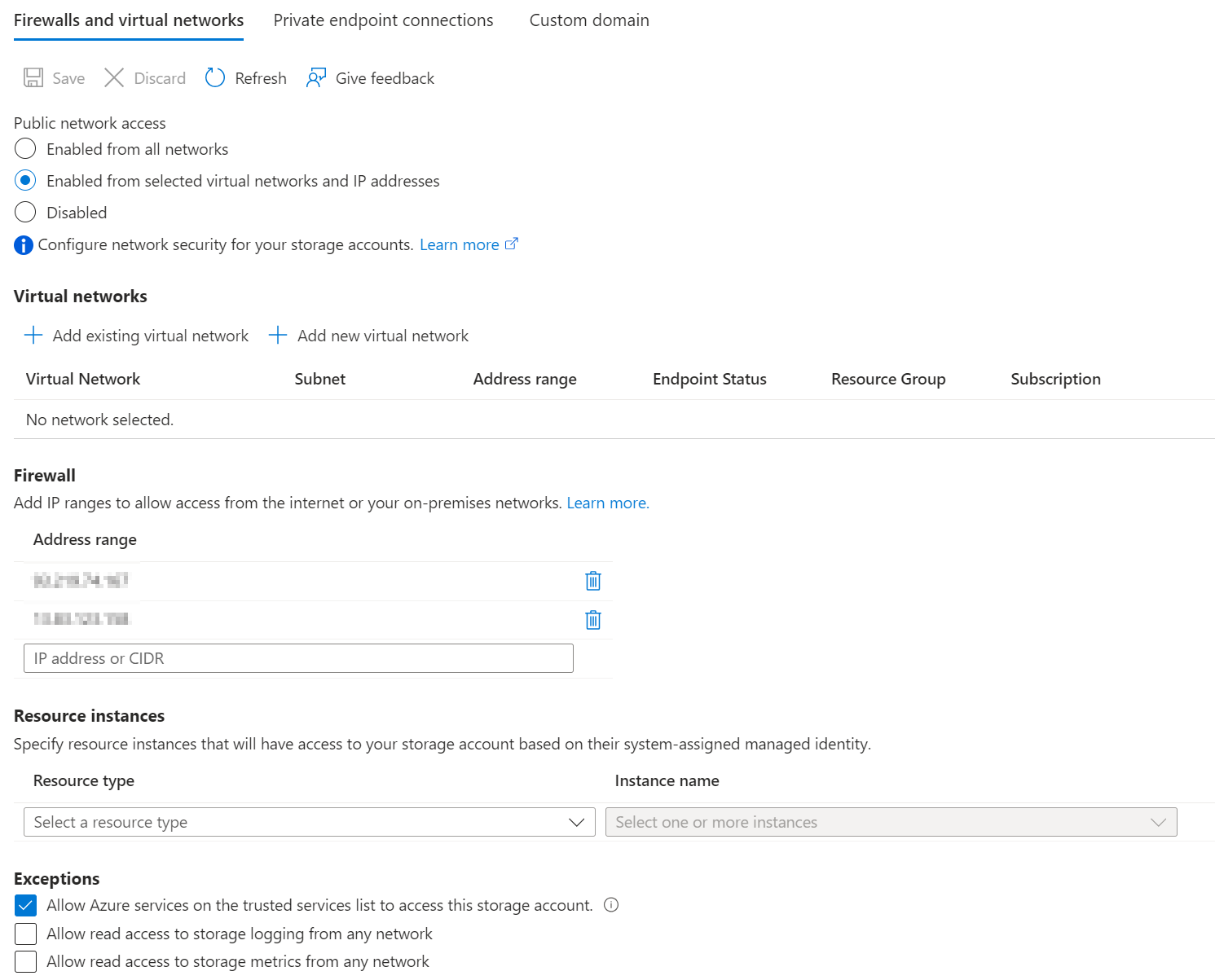

As you can see in the preceding diagram, two components must be considered in this setup: Azure Key Vault and the Azure Storage Account.

My key decisions:

- Both primary resources are configured to use Azure role-based access control (RBAC).

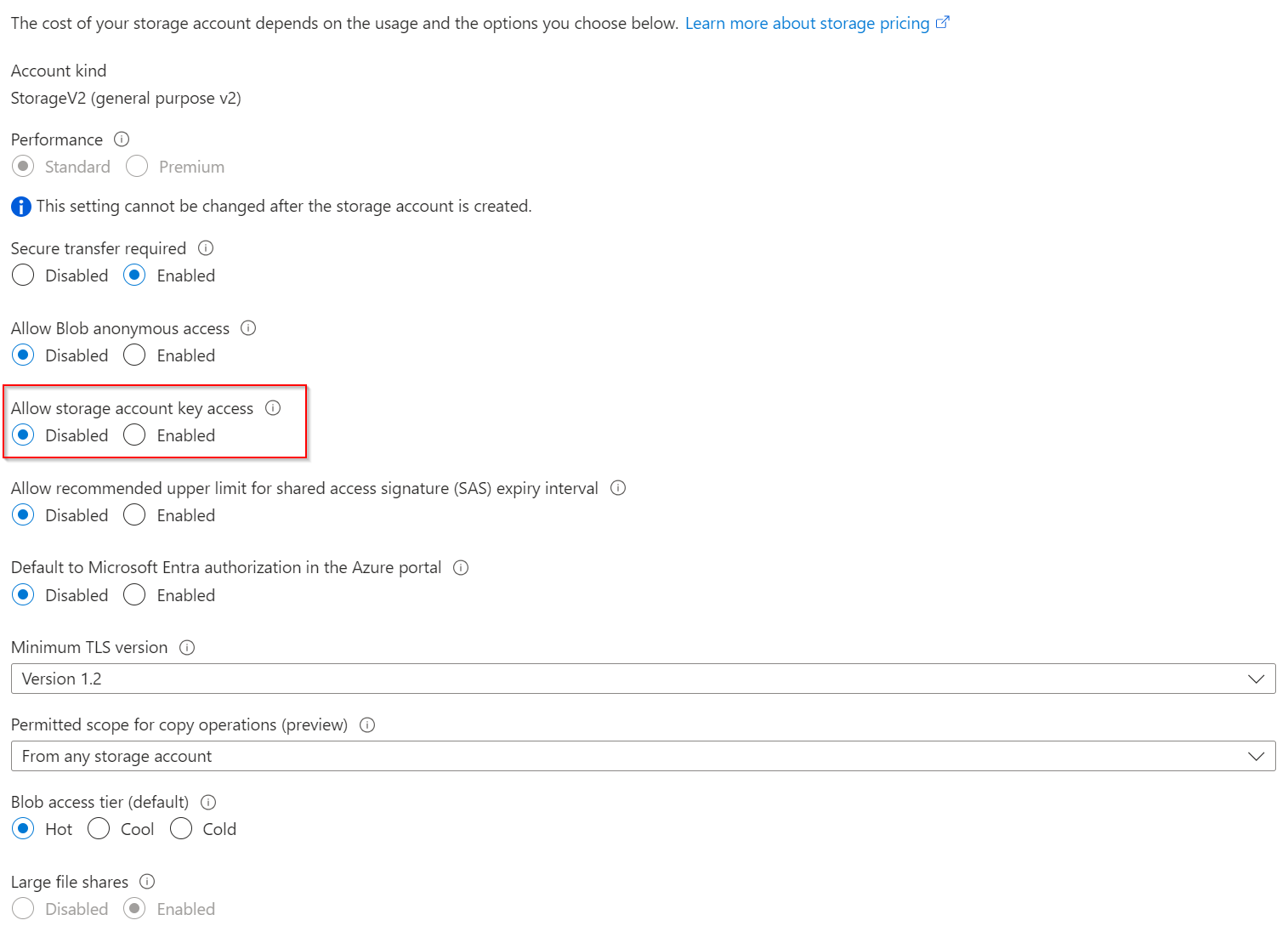

- Azure Storage Account key access is disabled

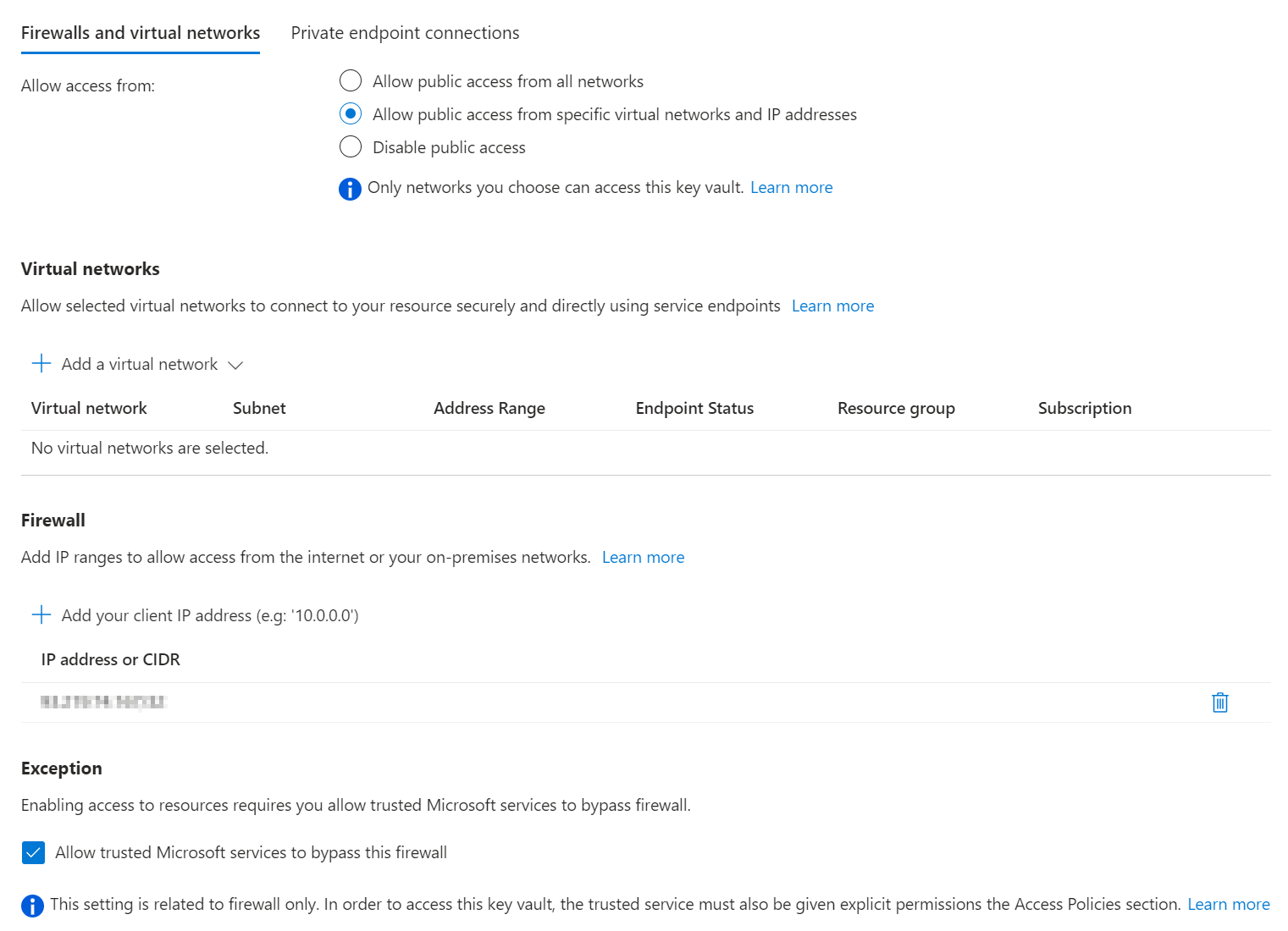

- Azure Key Vault network access is limited to my “Developer Workstation” and trusted Microsoft services (required for Azure Storage Account encryption with a customer-managed key)

- Azure Storage Account network access is limited to my “Developer Workstation” and trusted Microsoft services. The IP address of the GitHub runner is added to the allow-list on demand (not perfect, but it works; see also Stack Overflow: Which IPs to allow in Azure for Github Actions?). For enterprise use, you should consider self-hosted runners or the new GitHub Actions larger runners with fixed IP ranges.

For more details about my implementation of the on-demand allow-listing, have a look at one of my Terraform CI/CD workflows: GitHub Action - ValidateAndPlan
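The access-control decisions above could be expressed roughly as follows. This is a minimal sketch, not my actual implementation: it assumes the `hashicorp/azurerm` provider 3.x (some argument names, such as `enable_rbac_authorization`, changed in 4.x), and all resource names as well as the variables `developer_ip` and `terraform_principal_object_id` are hypothetical placeholders.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0" # assumption: argument names below follow azurerm 3.x
    }
  }
}

provider "azurerm" {
  features {}
  storage_use_azuread = true # key access is disabled, so data-plane calls must use Entra ID
}

variable "developer_ip" {
  description = "Public IP of the developer workstation (hypothetical)"
  type        = string
}

variable "terraform_principal_object_id" {
  description = "Object ID of the identity that runs Terraform (hypothetical)"
  type        = string
}

data "azurerm_client_config" "current" {}

resource "azurerm_resource_group" "tfstate" {
  name     = "rg-tfstate-example"
  location = "westeurope"
}

resource "azurerm_key_vault" "tfstate" {
  name                      = "kv-tfstate-example"
  location                  = azurerm_resource_group.tfstate.location
  resource_group_name       = azurerm_resource_group.tfstate.name
  tenant_id                 = data.azurerm_client_config.current.tenant_id
  sku_name                  = "standard"
  enable_rbac_authorization = true # Azure RBAC instead of access policies

  network_acls {
    default_action = "Deny"
    bypass         = "AzureServices" # trusted Microsoft services (needed for CMK)
    ip_rules       = [var.developer_ip]
  }
}

resource "azurerm_storage_account" "tfstate" {
  name                      = "sttfstateexample"
  resource_group_name       = azurerm_resource_group.tfstate.name
  location                  = azurerm_resource_group.tfstate.location
  account_tier              = "Standard"
  account_replication_type  = "ZRS"
  shared_access_key_enabled = false # disable storage account key access

  network_rules {
    default_action = "Deny"
    bypass         = ["AzureServices"]
    ip_rules       = [var.developer_ip] # a CI runner IP would be added on demand
  }
}

# Data-plane permission for the identity that reads and writes the state blob.
resource "azurerm_role_assignment" "tfstate_blob_contributor" {
  scope                = azurerm_storage_account.tfstate.id
  role_definition_name = "Storage Blob Data Contributor"
  principal_id         = var.terraform_principal_object_id
}
```

The state container itself is assumed to be created separately; creating it through Terraform with key access disabled also requires the caller to hold a blob data role and an allowed IP.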

The decision to disable key access means that Terraform must use Microsoft Entra ID authentication to access the storage container. The identity/service principal requires at least the “Storage Blob Data Contributor” role for this operation.
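A minimal backend configuration for Entra ID authentication might look like this. The resource group, storage account, container, and key values are hypothetical placeholders; `use_azuread_auth` is the `azurerm` backend argument that switches from account keys to Entra ID.

```hcl
terraform {
  backend "azurerm" {
    resource_group_name  = "rg-tfstate-example"        # hypothetical
    storage_account_name = "sttfstateexample"          # hypothetical
    container_name       = "tfstate"                   # hypothetical
    key                  = "example.terraform.tfstate" # blob name of the state file
    use_azuread_auth     = true                        # Entra ID instead of account keys
  }
}
```

Backend blocks cannot reference variables, so in practice these values are often supplied at `terraform init` time via `-backend-config` instead of being hard-coded.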

As I use OIDC (Workload Identity Federation) for the GitHub Actions workflow, the configuration requires even more parameters (or environment variables).
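With OIDC, the backend additionally needs to know which app registration to federate with. The sketch below assumes placeholder values; the all-zero GUIDs stand in for your own client, tenant, and subscription IDs.

```hcl
terraform {
  backend "azurerm" {
    resource_group_name  = "rg-tfstate-example"        # hypothetical
    storage_account_name = "sttfstateexample"          # hypothetical
    container_name       = "tfstate"                   # hypothetical
    key                  = "example.terraform.tfstate" # hypothetical
    use_azuread_auth     = true                        # Entra ID for blob access
    use_oidc             = true                        # Workload Identity Federation

    # Placeholders: app registration (client), tenant, and subscription IDs.
    client_id       = "00000000-0000-0000-0000-000000000000"
    tenant_id       = "00000000-0000-0000-0000-000000000000"
    subscription_id = "00000000-0000-0000-0000-000000000000"
  }
}
```

The same settings can instead come from the environment variables `ARM_USE_OIDC`, `ARM_USE_AZUREAD`, `ARM_CLIENT_ID`, `ARM_TENANT_ID`, and `ARM_SUBSCRIPTION_ID`, which keeps the IDs out of the code. In GitHub Actions, the workflow also needs the `id-token: write` permission so the backend can request the OIDC token.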

Encryption

The Terraform State contains confidential information and should therefore always be stored encrypted. To protect it in transit as well, TLS-secured transport must be enforced.

My key decisions:

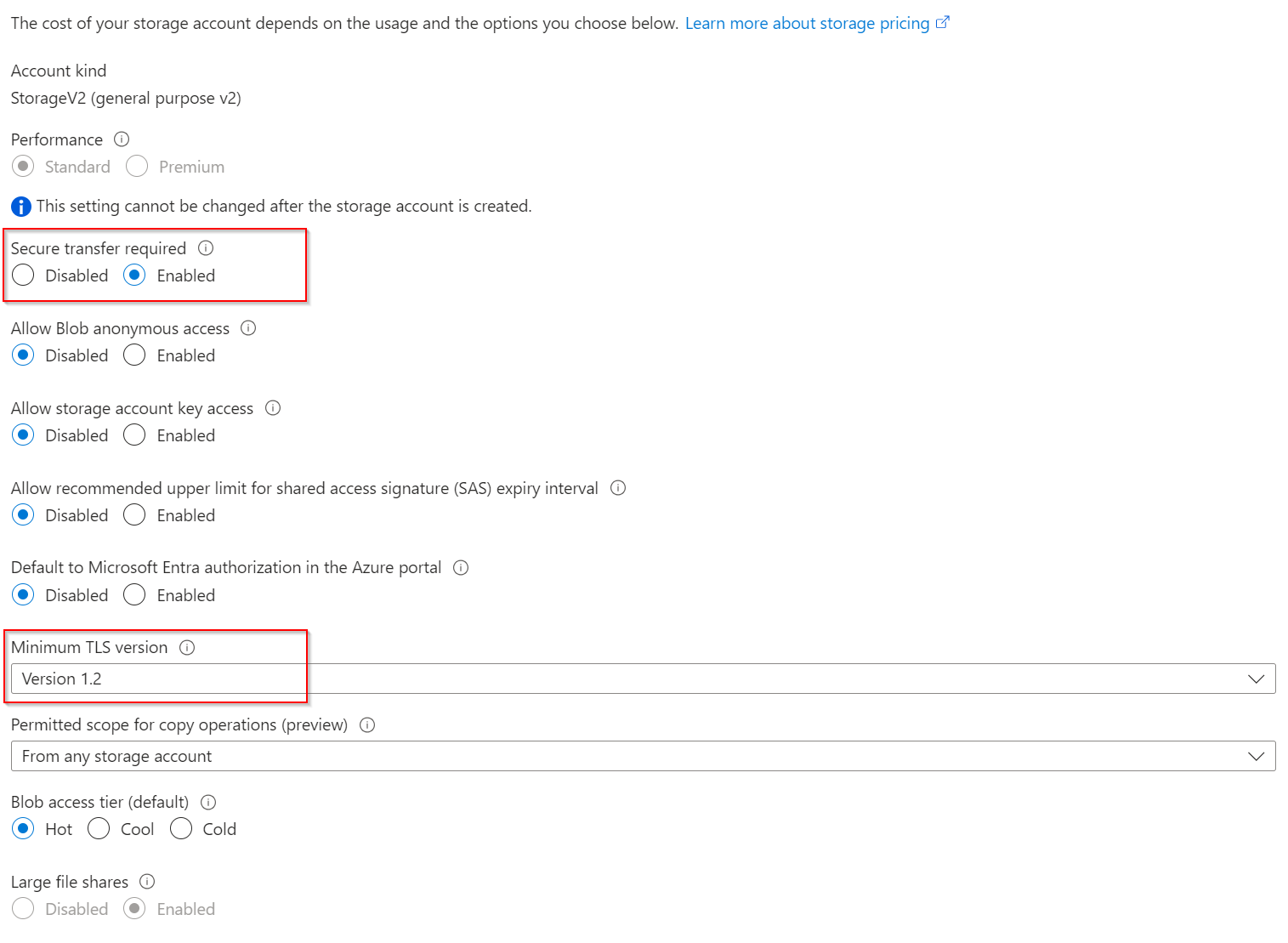

- Both primary resources use TLS-secured transport. However, the Azure Storage Account requires two additional options for hardening.

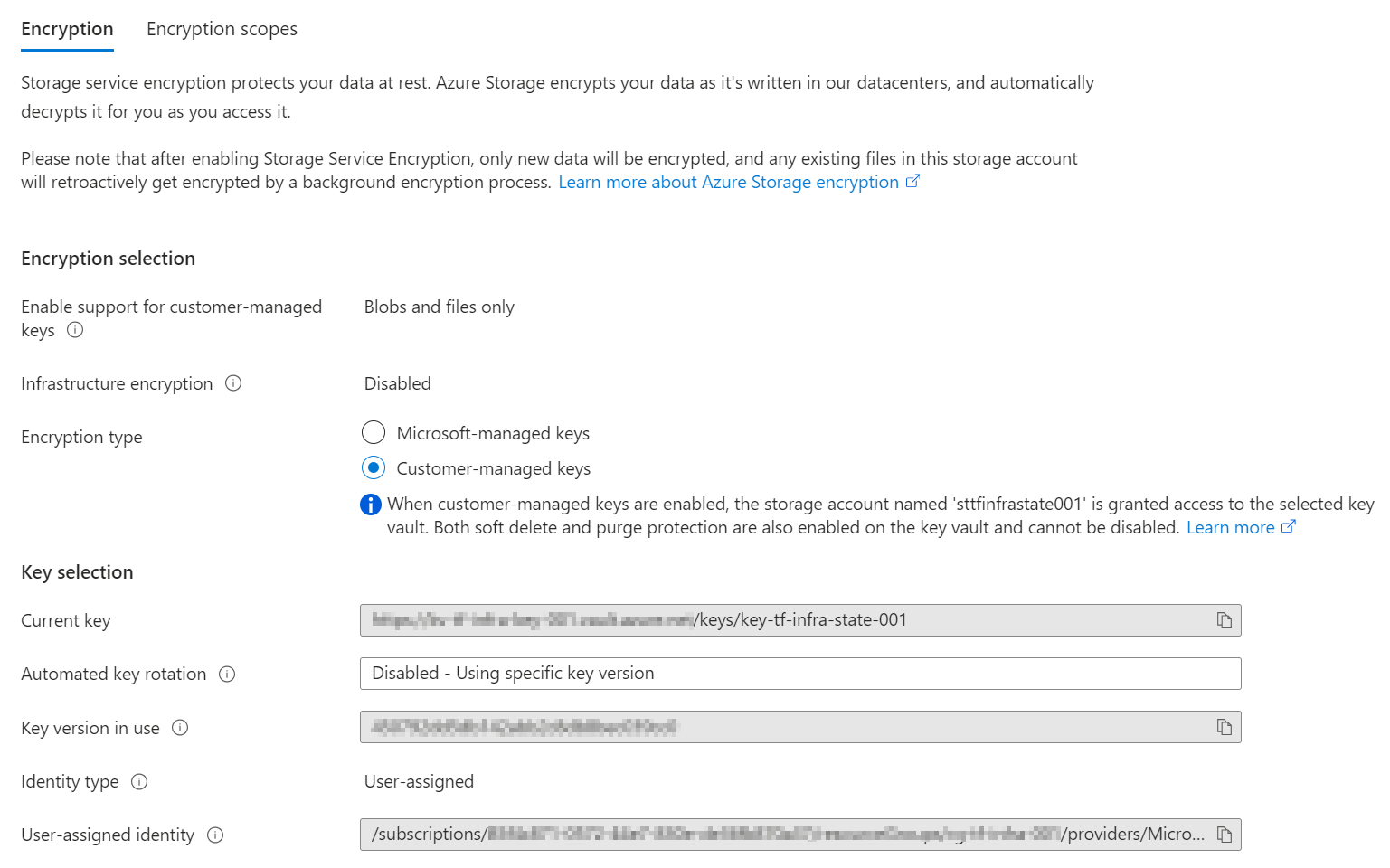

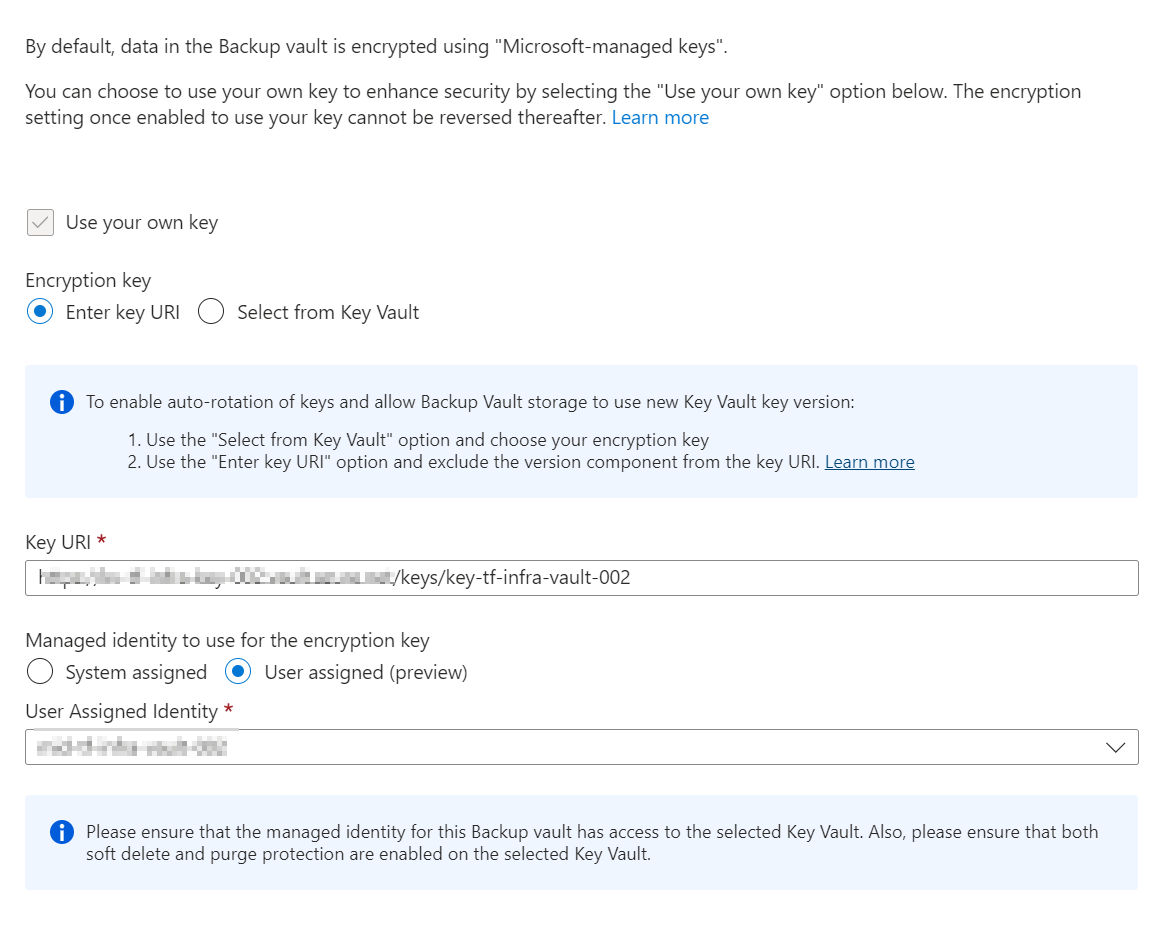

- I prefer customer-managed keys (CMK) for the data-at-rest encryption of the Azure Storage Account and the Azure Backup Vault. Customer-managed keys offer greater flexibility to manage access controls.

- A User-Assigned Managed Identity is used to authenticate the Azure Storage Account and the Azure Backup Vault against their Azure Key Vault.
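For the storage account, the encryption decisions could be sketched as follows. This is a standalone sketch assuming `hashicorp/azurerm` 3.x; `azurerm_resource_group.tfstate` and an RBAC-enabled `azurerm_key_vault.tfstate` (with purge protection, which storage CMK requires) are assumed to exist, all names are hypothetical, and in a real configuration these arguments would be merged into a single storage account resource. The TLS arguments are examples of transport hardening, not necessarily the two options shown in my screenshots.

```hcl
resource "azurerm_user_assigned_identity" "cmk" {
  name                = "id-tfstate-cmk" # hypothetical
  location            = azurerm_resource_group.tfstate.location
  resource_group_name = azurerm_resource_group.tfstate.name
}

# Lets the identity wrap/unwrap the data encryption key (Key Vault uses Azure RBAC).
resource "azurerm_role_assignment" "cmk_crypto_user" {
  scope                = azurerm_key_vault.tfstate.id
  role_definition_name = "Key Vault Crypto Service Encryption User"
  principal_id         = azurerm_user_assigned_identity.cmk.principal_id
}

resource "azurerm_key_vault_key" "tfstate" {
  name         = "cmk-tfstate" # hypothetical
  key_vault_id = azurerm_key_vault.tfstate.id
  key_type     = "RSA"
  key_size     = 2048
  key_opts     = ["wrapKey", "unwrapKey"]

  # Hypothetical rotation schedule; adjust to your key-rotation policy.
  rotation_policy {
    automatic {
      time_before_expiry = "P30D"
    }
    expire_after         = "P90D"
    notify_before_expiry = "P29D"
  }
}

resource "azurerm_storage_account" "tfstate" {
  name                            = "sttfstateexample" # hypothetical
  resource_group_name             = azurerm_resource_group.tfstate.name
  location                        = azurerm_resource_group.tfstate.location
  account_tier                    = "Standard"
  account_replication_type        = "ZRS"
  shared_access_key_enabled       = false
  enable_https_traffic_only       = true     # "secure transfer required"
  min_tls_version                 = "TLS1_2" # reject older TLS versions
  allow_nested_items_to_be_public = false

  identity {
    type         = "UserAssigned"
    identity_ids = [azurerm_user_assigned_identity.cmk.id]
  }

  customer_managed_key {
    key_vault_key_id          = azurerm_key_vault_key.tfstate.versionless_id # picks up rotated key versions
    user_assigned_identity_id = azurerm_user_assigned_identity.cmk.id
  }

  depends_on = [azurerm_role_assignment.cmk_crypto_user]
}
```

A user-assigned identity is used here because it exists before the storage account does, so CMK can be configured at creation time. The identity running Terraform additionally needs a role such as “Key Vault Crypto Officer” to create the key and its rotation policy.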

Additional considerations:

- For enterprise use, you should implement proper key rotation.

- Customer-managed keys (CMK) are more effective with a third-party key management system.

Data Protection

Appropriate measures should be taken to prevent accidental deletion of the remote state storage (including the resources required for functional access, e.g. encryption keys) and to be prepared for rapid rollback in the event of an emergency.

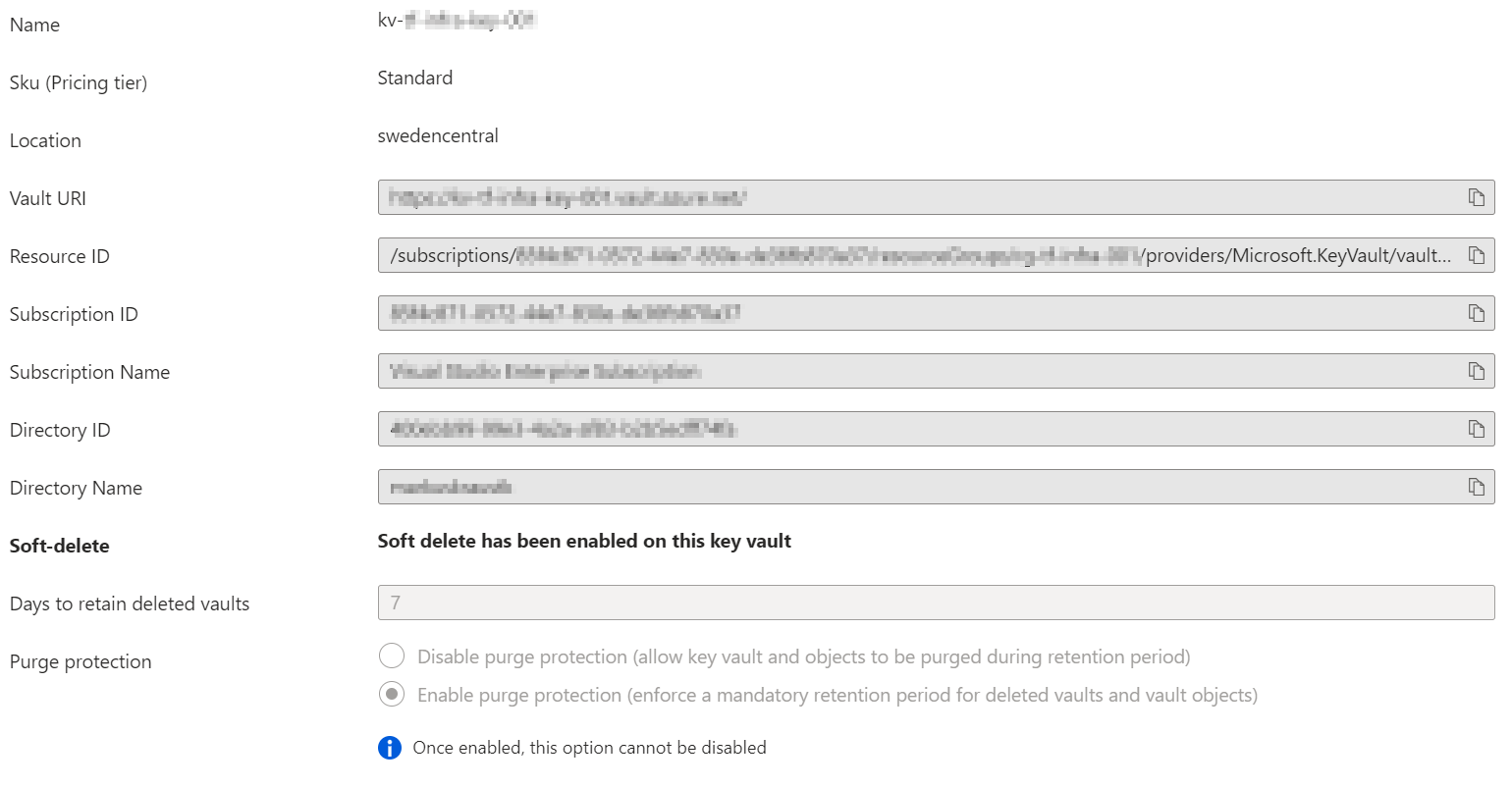

My key decisions:

- Soft delete has been enabled on the Azure Key Vault.

- Azure Key Vault uses purge protection to enforce a mandatory retention period for deleted vaults and vault objects.

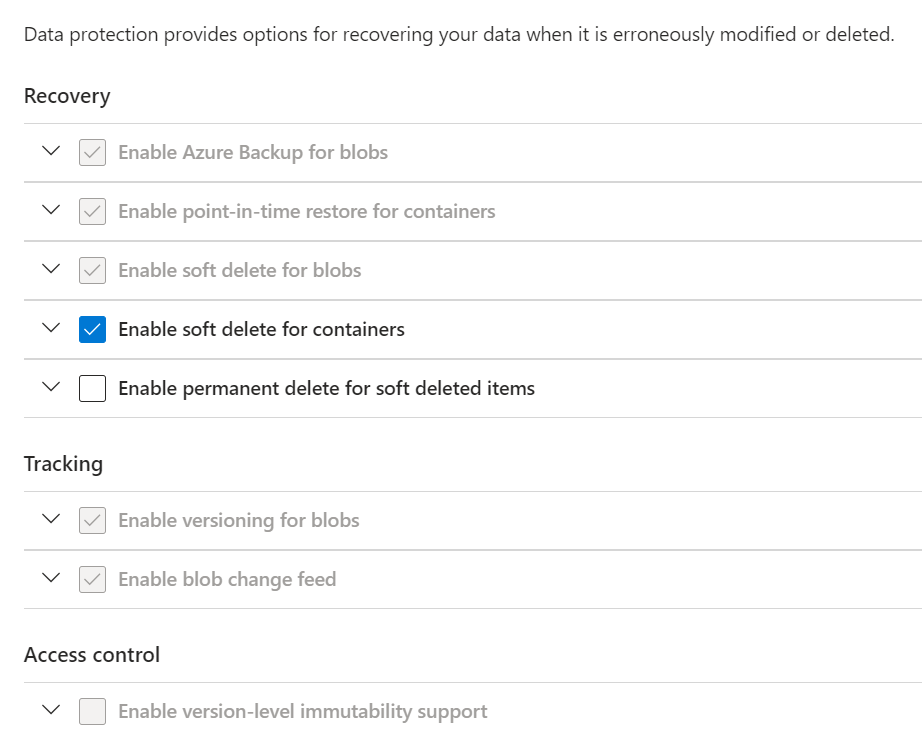

- Azure Storage Account Blob soft delete is enabled

- Azure Storage Account Container soft delete is enabled

- Azure Storage Account Versioning is enabled

- Azure Storage Account point-in-time restore for containers is enabled

- Azure Storage Account blob change feed is enabled
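The data-protection settings above map to a handful of arguments. A sketch assuming `hashicorp/azurerm` 3.x, an existing `azurerm_resource_group.tfstate`, and hypothetical names and retention periods:

```hcl
data "azurerm_client_config" "current" {}

resource "azurerm_key_vault" "tfstate" {
  name                       = "kv-tfstate-example" # hypothetical
  location                   = azurerm_resource_group.tfstate.location
  resource_group_name        = azurerm_resource_group.tfstate.name
  tenant_id                  = data.azurerm_client_config.current.tenant_id
  sku_name                   = "standard"
  enable_rbac_authorization  = true
  soft_delete_retention_days = 90   # soft delete (mandatory on new vaults)
  purge_protection_enabled   = true # deleted vault/objects cannot be purged early
}

resource "azurerm_storage_account" "tfstate" {
  name                     = "sttfstateexample" # hypothetical
  resource_group_name      = azurerm_resource_group.tfstate.name
  location                 = azurerm_resource_group.tfstate.location
  account_tier             = "Standard"
  account_replication_type = "ZRS"

  blob_properties {
    versioning_enabled  = true # keep previous versions of the state blob
    change_feed_enabled = true # required for point-in-time restore

    delete_retention_policy { # blob soft delete
      days = 30
    }

    container_delete_retention_policy { # container soft delete
      days = 30
    }

    restore_policy { # point-in-time restore
      days = 29      # must be shorter than the blob soft-delete retention
    }
  }
}
```

Point-in-time restore depends on versioning, change feed, and blob soft delete, which is why all of them are enabled together.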

Data Redundancy

Depending on the frequency and criticality of changes to your infrastructure, availability can be a critical issue. Because the amount of data involved is usually very small, you can afford a little extra redundancy.

My key decisions:

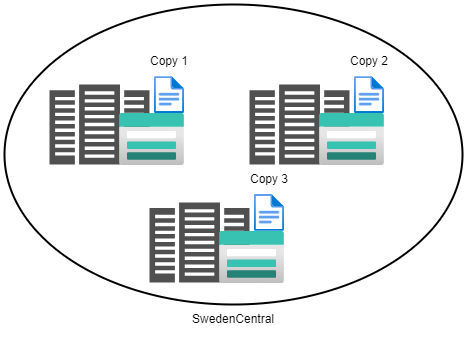

- Azure Storage Account uses Zone-redundant storage (ZRS) replication

- Azure Key Vault is provisioned in a region that has a paired region, so the keys are replicated both within the region and to the paired region.

Zone-redundant storage (ZRS) replicates your storage account synchronously across three Azure availability zones in the primary region. Each availability zone is a separate physical location with independent power, cooling, and networking. ZRS offers durability for storage resources of at least 99.9999999999% (12 9s) over a given year.

Source: Azure Storage redundancy

Additional considerations:

- For a multi-region Terraform deployment, zone-redundant storage (ZRS) replication might not be sufficient.
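In Terraform, the replication choice is a single argument on the storage account. A minimal sketch with hypothetical names (assuming `hashicorp/azurerm` 3.x); the commented alternative shows where a multi-region setup would differ:

```hcl
resource "azurerm_storage_account" "tfstate" {
  name                     = "sttfstateexample"   # hypothetical
  resource_group_name      = "rg-tfstate-example" # hypothetical
  location                 = "westeurope"         # hypothetical
  account_tier             = "Standard"
  account_replication_type = "ZRS" # synchronous copies across three availability zones

  # For multi-region deployments, "GZRS" adds an asynchronous copy
  # in the secondary region on top of zone redundancy.
}
```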

Disaster Recovery

Redundancy and backup & recovery should form one aligned concept. Again, because of the small amount of data, an extra copy or location is welcome. And always remember: a backup without regular testing is useless.

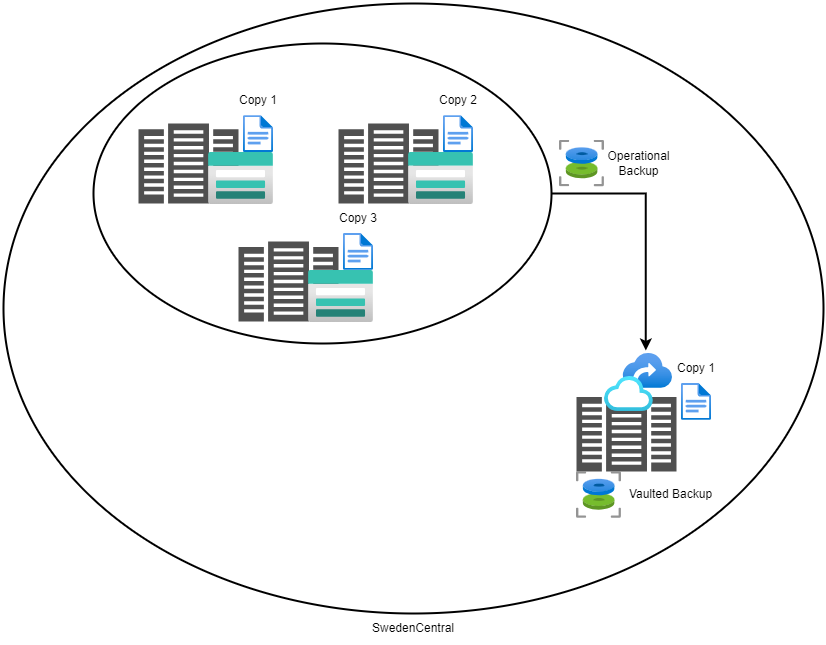

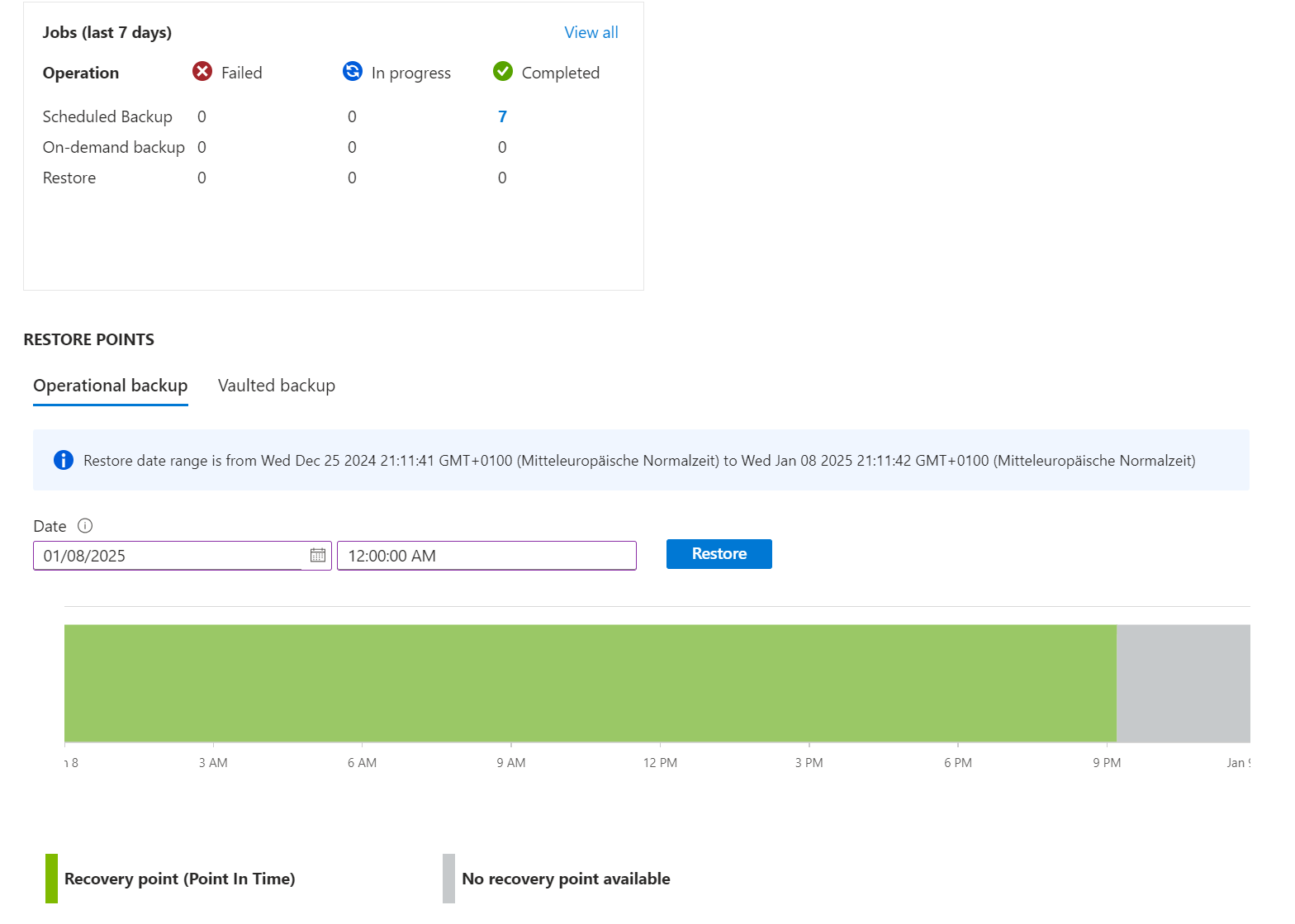

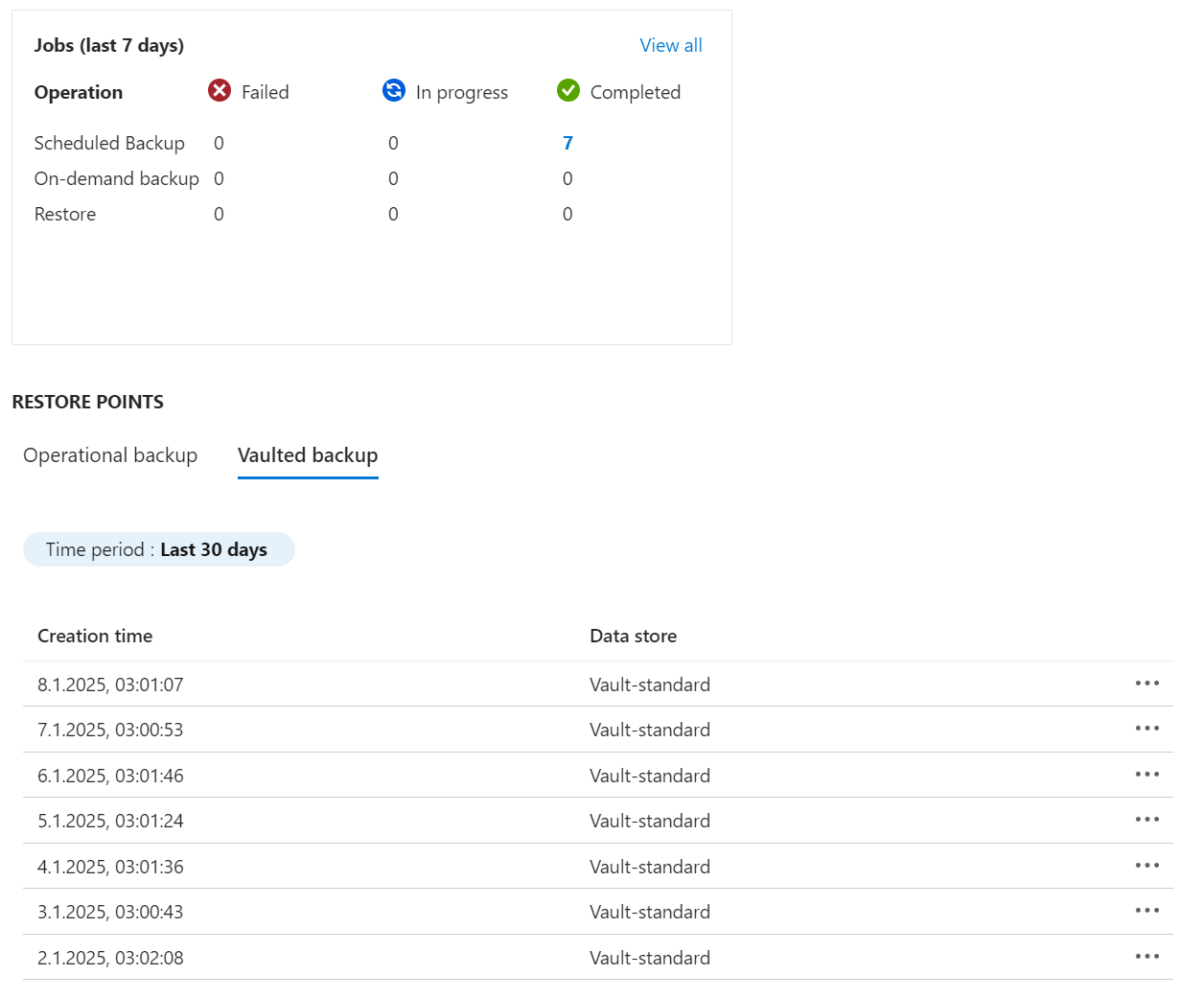

Azure offers two types of backups for your blobs:

Continuous backups: You can configure operational backup, a managed local data protection solution, to protect your block blobs from accidental deletion or corruption. The data is stored locally within the source storage account and not transferred to the backup vault. You don’t need to define any schedule for backups. All changes are retained, and you can restore them from the state at a selected point in time.

Periodic backups: You can configure vaulted backup, a managed offsite data protection solution, to get protection against any accidental or malicious deletion of blobs or storage account. The backup data using vaulted backups is copied and stored in the Backup vault as per the schedule and frequency you define via the backup policy and retained as per the retention configured in the policy.

My key decisions:

- Azure Backup Vault has soft delete functionality enabled

- Azure Backup Vault is configured with Locally redundant storage (LRS)

- Azure Backup Vault uses a System-Assigned Managed Identity for the Azure Storage Account backup operation (check the limitations)

- Azure Storage Account uses Operational Backup for local point-in-time restore

- Azure Storage Account additionally uses Vaulted Backup to keep a copy independent of the primary storage account

- Azure Key Vault Key backup is performed on each Key rotation. The Key backup is stored in a secure location (e.g. password safe).
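The backup decisions above could be sketched as follows. This assumes a recent `hashicorp/azurerm` 3.x release that supports vaulted blob backup and the `soft_delete` argument; `azurerm_resource_group.tfstate` and `azurerm_storage_account.tfstate` are assumed to exist, and all names, retention periods, and the backup schedule are hypothetical.

```hcl
resource "azurerm_data_protection_backup_vault" "tfstate" {
  name                = "bvault-tfstate-example" # hypothetical
  resource_group_name = azurerm_resource_group.tfstate.name
  location            = azurerm_resource_group.tfstate.location
  datastore_type      = "VaultStore"
  redundancy          = "LocallyRedundant" # LRS
  soft_delete         = "On"

  identity {
    type = "SystemAssigned" # used for the storage account backup operation
  }
}

# The vault identity needs this role on the storage account to run blob backups.
resource "azurerm_role_assignment" "backup_contributor" {
  scope                = azurerm_storage_account.tfstate.id
  role_definition_name = "Storage Account Backup Contributor"
  principal_id         = azurerm_data_protection_backup_vault.tfstate.identity[0].principal_id
}

resource "azurerm_data_protection_backup_policy_blob_storage" "tfstate" {
  name     = "bkpol-tfstate" # hypothetical
  vault_id = azurerm_data_protection_backup_vault.tfstate.id

  operational_default_retention_duration = "P30D" # operational (continuous) backup
  vault_default_retention_duration       = "P30D" # vaulted (periodic) backup
  backup_repeating_time_intervals        = ["R/2024-01-07T02:00:00+00:00/P1W"] # weekly
}

resource "azurerm_data_protection_backup_instance_blob_storage" "tfstate" {
  name                            = "bkinst-tfstate" # hypothetical
  vault_id                        = azurerm_data_protection_backup_vault.tfstate.id
  location                        = azurerm_resource_group.tfstate.location
  storage_account_id              = azurerm_storage_account.tfstate.id
  backup_policy_id                = azurerm_data_protection_backup_policy_blob_storage.tfstate.id
  storage_account_container_names = ["tfstate"] # containers copied by vaulted backup

  depends_on = [azurerm_role_assignment.backup_contributor]
}
```

The Key Vault key backup is not covered here; it happens outside Terraform, for example with `az keyvault key backup`, and the resulting file goes to the secure location mentioned above.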

Additional considerations:

- Azure Backup Vault can use the immutability feature to protect backups against malicious actors/activity

- Consider a backup concept/solution that allows a cloud provider-independent copy

- Consider a backup concept/solution that allows a copy to be stored in another geographical location.

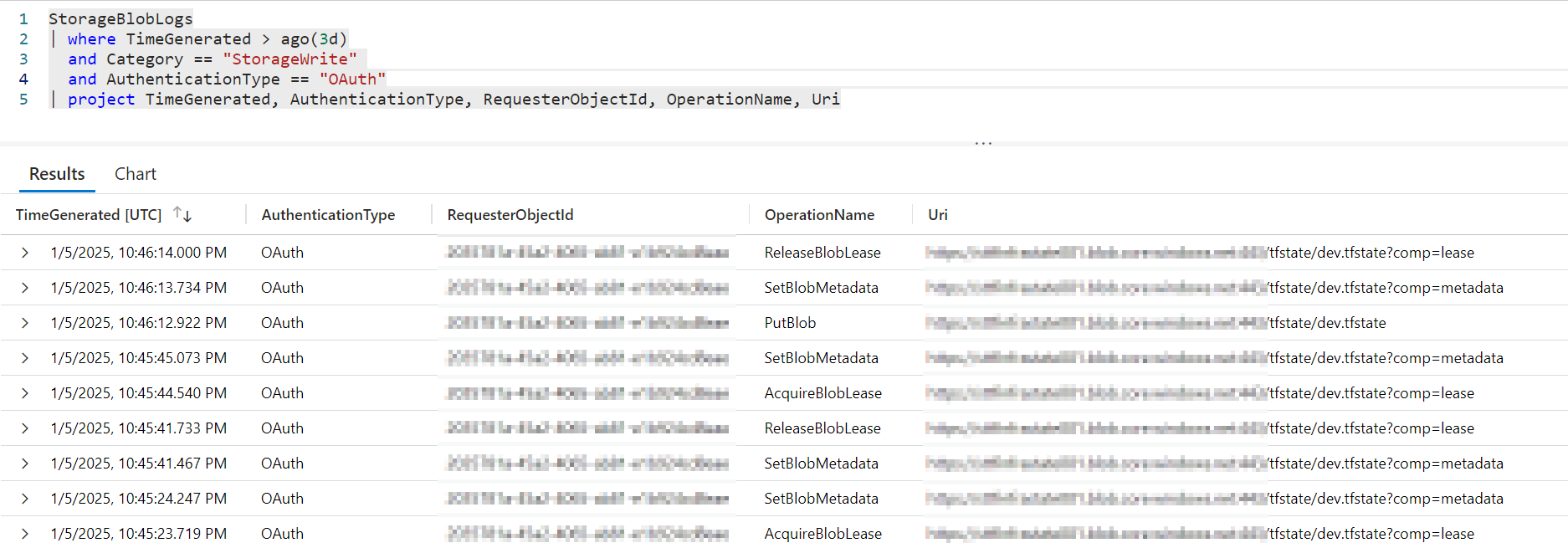

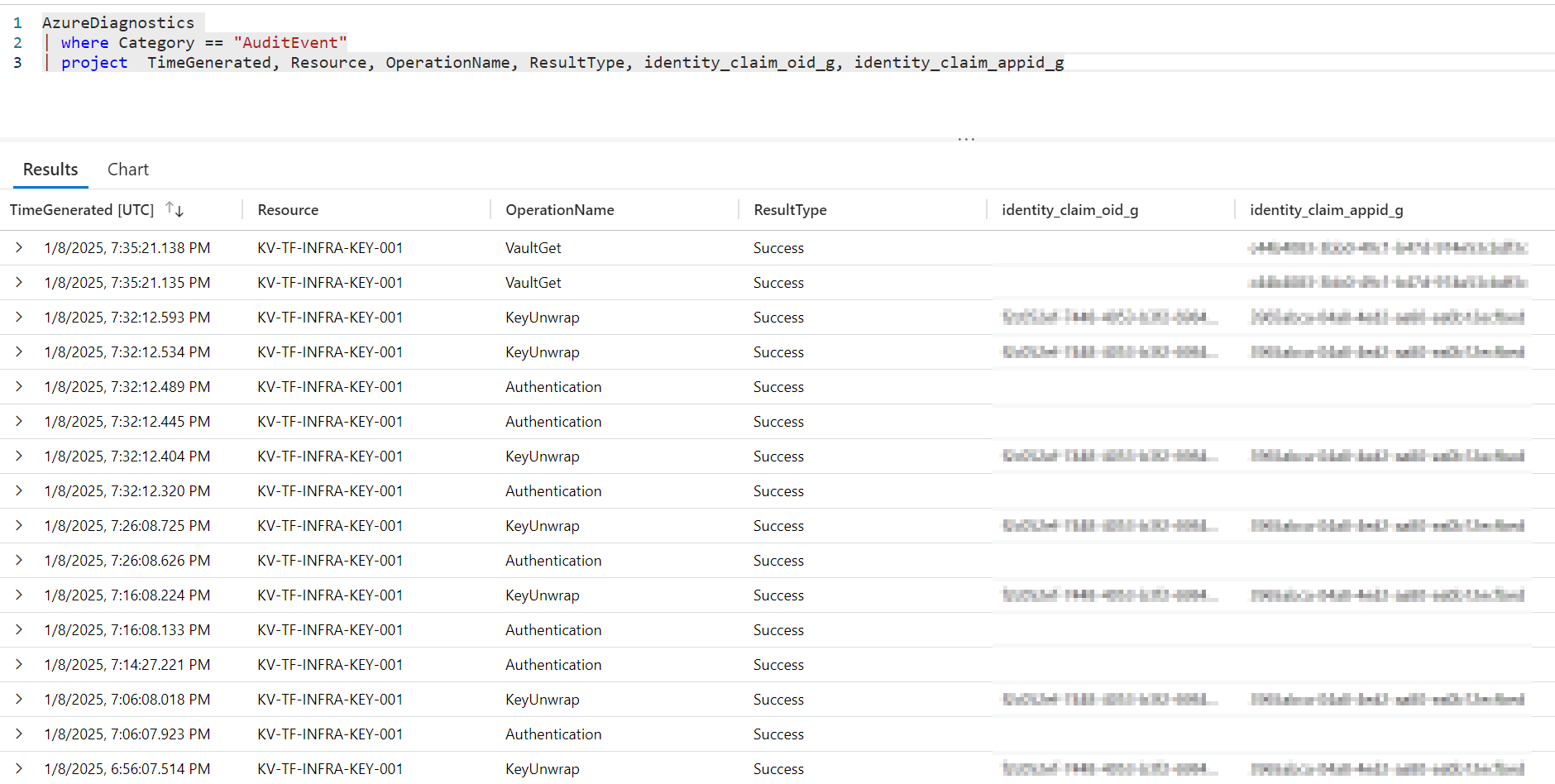

Logging

It is very important to be able to track how requests to the Storage Account (Blob Service) are authorised and how the keys in the Key Vault are used.

My key decisions:

- Both resources have a diagnostic configuration to collect logs in a Log Analytics Workspace
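A diagnostic configuration along these lines would route both log streams into one workspace. This is a sketch assuming `hashicorp/azurerm` 3.39 or later (for the `enabled_log` block), existing `azurerm_resource_group.tfstate`, `azurerm_storage_account.tfstate`, and `azurerm_key_vault.tfstate` resources, and hypothetical names:

```hcl
resource "azurerm_log_analytics_workspace" "tfstate" {
  name                = "log-tfstate-example" # hypothetical
  location            = azurerm_resource_group.tfstate.location
  resource_group_name = azurerm_resource_group.tfstate.name
  sku                 = "PerGB2018"
  retention_in_days   = 30
}

# Blob service logs: who read, wrote, or deleted the state blob and how the request was authorised.
resource "azurerm_monitor_diagnostic_setting" "blob" {
  name                       = "diag-tfstate-blob"
  target_resource_id         = "${azurerm_storage_account.tfstate.id}/blobServices/default"
  log_analytics_workspace_id = azurerm_log_analytics_workspace.tfstate.id

  enabled_log {
    category = "StorageRead"
  }

  enabled_log {
    category = "StorageWrite"
  }

  enabled_log {
    category = "StorageDelete"
  }
}

# Key Vault audit logs, including key unwrap operations by the CMK identity.
resource "azurerm_monitor_diagnostic_setting" "key_vault" {
  name                       = "diag-tfstate-kv"
  target_resource_id         = azurerm_key_vault.tfstate.id
  log_analytics_workspace_id = azurerm_log_analytics_workspace.tfstate.id

  enabled_log {
    category = "AuditEvent"
  }
}
```

Note that the blob logs are attached to the `blobServices/default` sub-resource, not to the storage account itself.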

The following screenshot shows the blob operations of a `terraform apply` process. The security principal is the Azure Enterprise Application used by the GitHub Action via OIDC (Workload Identity Federation).

The following screenshot shows the Key Vault activity. The security principal for the “KeyUnwrap” operation is the Managed Identity used for the Storage Account blob encryption.

Summary

I have now explained many of my own decisions and also suggested further considerations on the subject. Finally, I have one piece of advice:

Terraform Remote State is essential if you are working in a collaborative team and/or with CI/CD pipelines. However, it should not be a concern at the beginning of your Terraform journey. Initially, a local state is more than fine for the first few projects.

References

- https://developer.hashicorp.com/terraform/language/backend/azurerm

- https://learn.microsoft.com/en-us/azure/storage/common/customer-managed-keys-configure-existing-account

- https://learn.microsoft.com/en-us/azure/storage/blobs/security-recommendations

- https://learn.microsoft.com/en-us/azure/storage/blobs/blob-storage-monitoring-scenarios

- https://learn.microsoft.com/en-us/azure/key-vault/general/disaster-recovery-guidance

- https://learn.microsoft.com/en-us/azure/backup/blob-backup-overview